Tired of paying $20 every month for ChatGPT Plus? Concerned about your private conversations being used to train the next AI model? What if you could have a powerful, uncensored AI assistant that runs entirely on your own computer, with no monthly fees, no usage limits, and 100% privacy?

When I saw my ChatGPT bill last month, I knew there had to be a better way. So I started diving into the world of local LLMs.

This is now a reality. In 2026, running a Large Language Model (LLM) like Meta’s Llama 3, Mistral AI’s models, or Qwen 2.5 on your personal PC is easier and more practical than ever. The hardware that cost $3,000 just two years ago is now accessible for a fraction of the price, and the software has become incredibly user-friendly.

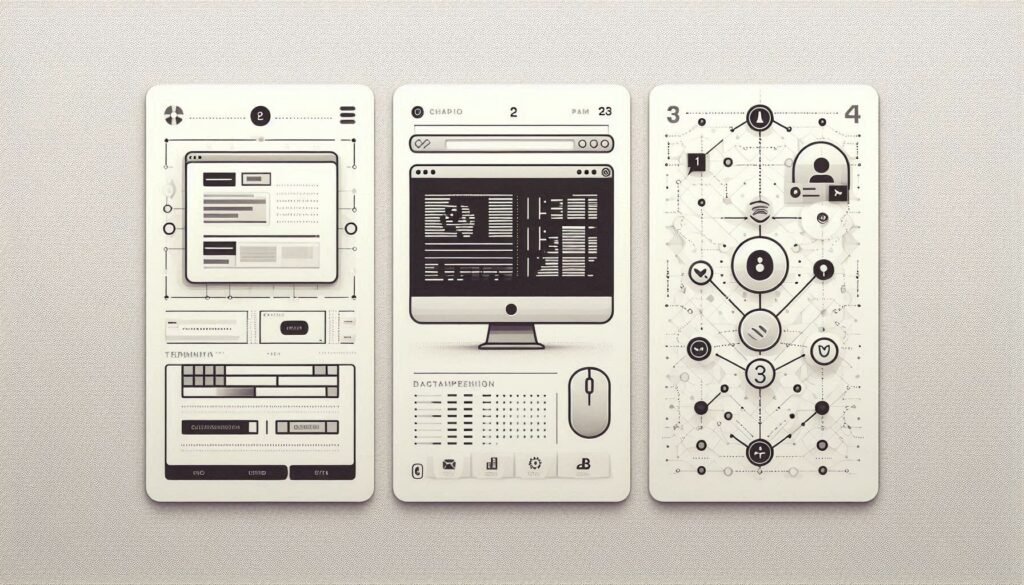

In this complete 2026 guide, we’ll walk you through three methods to install a private AI assistant on your Windows, Mac, or Linux machine: from a one-line command for beginners to a fully-featured web interface for power users. We’ll cover the exact hardware you need, provide step-by-step instructions, and help you choose the best model for your tasks.

What You’ll Achieve:

✅ Total Privacy: Your data never leaves your computer.

✅ Zero Recurring Costs: Pay only for the electricity.

✅ Unlimited Use: Generate text, code, and analysis 24/7.

✅ Customization: Fine-tune and control your AI like never before.

What You Need: 2026 Hardware Requirements

You don’t need a supercomputer, but your experience will be directly tied to your hardware. The single most important component is your GPU’s VRAM, which determines how large and capable a model you can run smoothly.

Here’s what you need to get started in 2026:

| Component | Minimum (Slow but Works) | Recommended (Good Experience) | Ideal (Fast & Powerful) |
|---|---|---|---|
| GPU (Most Important) | NVIDIA RTX 3060 12GB / AMD RX 6700 XT 12GB | NVIDIA RTX 4070 Ti Super 16GB / RTX 4080 16GB | NVIDIA RTX 4090 24GB |
| RAM | 16 GB DDR4 | 32 GB DDR5 | 64 GB+ DDR5 |
| Storage | 50 GB free (for models & software) | 100 GB+ NVMe SSD | 1 TB+ NVMe SSD (for large model libraries) |
| CPU | Intel i5 / AMD Ryzen 5 (Recent Gen) | Intel i7 / AMD Ryzen 7 | Intel i9 / AMD Ryzen 9 |
| OS | Windows 10/11, macOS 13+, or Linux | Same as Minimum | Same as Minimum |

On my own test bench, with an RTX 4070 Ti and 32GB of RAM, the Qwen 2.5 7B model generates complete responses very quickly.

💡 Pro Tip: If you have a Mac with an M1/M2/M3 chip, you’re in luck. These run LLMs very efficiently using unified memory. A Mac with 16GB of RAM can often run models that would require 12GB of VRAM on a Windows PC.

Key Takeaway: For a good, responsive chat experience with a 7-8 billion parameter model (like Llama 3 8B), aim for a setup with at least 16GB of total VRAM+RAM dedicated to the AI. The “Recommended” tier above is the sweet spot for most users in 2026.
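To make the memory rule of thumb concrete, here is a rough back-of-the-envelope sketch. It assumes memory use is dominated by the weights (parameter count times bits per weight) plus a fixed overhead for context and runtime buffers. The 1.5 GB overhead and the 4.5 bits-per-weight figure for a 4-bit-style quantization are illustrative assumptions, not measured values.

```python
def estimate_model_memory_gb(
    params_billions: float,
    bits_per_weight: float,
    overhead_gb: float = 1.5,  # assumed allowance for context and runtime buffers
) -> float:
    """Rough memory needed to load a model, in GB (1 GB = 1e9 bytes)."""
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes / 1e9 + overhead_gb


# Compare an 8B model at different precisions (bit widths are approximations).
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit style", 4.5)]:
    print(f"8B model, {label}: ~{estimate_model_memory_gb(8, bits):.1f} GB")
```

Real usage varies with context length and the loader you pick, so treat the result as a starting point for choosing a model size rather than a guarantee.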

Method 1: Ollama (The Absolute Easiest Way)

Best for: Beginners, Mac users, and anyone who wants a working AI assistant in under 5 minutes with zero configuration.

Platforms: Windows, macOS, Linux.

Ollama is a game-changer. It’s a framework that bundles a model, its weights, and everything it needs to run into a single package. You install Ollama, then run models with one command.

Step-by-Step Installation:

- Download & Install:

- Go to the official Ollama website.

- Download the installer for your operating system (Windows, macOS, Linux).

- Run the installer—it’s completely straightforward.

- Pull Your First Model: Open your terminal (Command Prompt on Windows, Terminal on Mac/Linux) and type `ollama run llama3.2`. This command downloads Llama 3.2 (the default 3B variant, roughly 2 GB) and starts a chat session. The first download will take a few minutes depending on your internet speed.

- You’re Done. Start Chatting: Once the download finishes, you’ll see a `>>>` prompt. Type your question and press Enter. For example: `>>> Write a short Python script to sort a list of numbers.`
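Beyond the terminal prompt, Ollama also runs a local HTTP server, so you can send the same kind of question from a script. The sketch below assumes Ollama is running on its default address (`http://localhost:11434`) and that you have already pulled `llama3.2`. The helper names `build_generate_request` and `ask_ollama` are made up for this example.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3.2") -> dict:
    """Build the JSON body for a single, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask_ollama(prompt: str, model: str = "llama3.2", timeout: float = 120.0) -> str:
    """Send one prompt to the local server and return the generated text."""
    payload = json.dumps(build_generate_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]


# Example (requires Ollama to be running locally):
# print(ask_ollama("Write a short Python script to sort a list of numbers."))
```

Setting `stream` to `False` returns the whole answer in one JSON object, which keeps the example short. For a live typing effect you would read the streamed chunks instead.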

Why Choose Ollama?

- ✅ Pros: Unbelievably simple. Huge library of models (browse it on the Ollama website; run `ollama list` to see the ones you have already downloaded). Great for prototyping.

- ❌ Cons: Offers less fine-grained control over settings. The chat interface is terminal-based (though you can add a GUI, as described below).

To add a beautiful web interface to Ollama, install Open WebUI (formerly Ollama WebUI). It’s a one-command Docker install that gives you a ChatGPT-like experience. Installation instructions are in the Open WebUI documentation.

Personally, I use Ollama for my daily tasks.

Method 2: LM Studio (Best Graphical Interface)

Best for: Windows and Mac users who prefer a beautiful, no-code desktop application.

Platforms: Windows, macOS (Intel & Apple Silicon).

If the command line isn’t your thing, LM Studio is your best friend. It’s a powerful, intuitive desktop application that handles everything for you.

Step-by-Step Installation:

- Download: Go to the LM Studio website and download the latest release for your OS.

- Install & Launch: Run the installer and open LM Studio.

- Download a Model Inside the App:

- Click on the search icon on the left.

- You can search for models like “Mistral 7B” or “Llama 3.1”.

- Look for models in the GGUF file format (this is the standard for local LLMs). Select a model and click “Download”.

- Load the Model & Chat:

- Go to the “Chat” tab on the left.

- In the top dropdown, select the model you just downloaded.

- Click “Load”. Once the progress bar is full, start typing in the bottom text box!

Why Choose LM Studio?

- ✅ Pros: Stunning, user-friendly GUI. Built-in model hub. Easy to switch between models. Excellent for casual use and experimentation.

- ❌ Cons: An application you must download and update. Slightly less flexibility for ultra-advanced users.

It may sound odd, but I really loved Mistral 7B. Its balance of speed, intelligence, and uncensored, logical approach to problem-solving is, in my opinion, unmatched for general use.

Method 3: Text Generation WebUI (Most Powerful & Flexible)

Best for: Advanced users, tinkerers, and researchers who want maximum control, extensions, and features.

Platforms: Windows, Linux (macOS can be tricky).

This is the “Automatic1111 of text generation.” It’s a comprehensive, web-based interface (like the Stable Diffusion WebUI) with an insane number of features and extensions.

Step-by-Step Installation (Windows):

- Install Prerequisites: Ensure you have Python 3.10 and Git installed. (You likely have these from your Stable Diffusion setup).

- Clone & Run: Open a command line in the folder where you want to install it and run `git clone https://github.com/oobabooga/text-generation-webui`, then `cd text-generation-webui`, then `start_windows.bat`. The first run will install all dependencies.

- Download a Model:

- Download a model in GGUF format from a site like TheBloke’s page on Hugging Face.

- Place the downloaded `.gguf` file in the `text-generation-webui/models/` folder.

- Load the Model in the WebUI:

- In the “Model” tab, click “Refresh”, then select your model from the dropdown.

- Click “Load”.

- Switch to the “Chat” or “Text generation” tab to start using it.
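If a model does not show up after you click “Refresh”, a quick sanity check is to confirm the file really is a GGUF file and sits in the models folder. GGUF files begin with the four ASCII bytes `GGUF`, so a small script can verify that. This is a minimal sketch: the folder path in the usage comment is the one from the steps above, and the function names are invented for illustration.

```python
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # GGUF files start with these four bytes


def has_gguf_magic(header: bytes) -> bool:
    """Return True if the given bytes start with the GGUF magic number."""
    return header[:4] == GGUF_MAGIC


def is_gguf_file(path: Path) -> bool:
    """Return True if the path exists and looks like a GGUF model file."""
    if not path.is_file():
        return False
    with path.open("rb") as handle:
        return has_gguf_magic(handle.read(4))


def list_gguf_models(models_dir: Path) -> list[str]:
    """List the names of valid-looking .gguf files in a folder."""
    return sorted(p.name for p in models_dir.glob("*.gguf") if is_gguf_file(p))


# Example:
# print(list_gguf_models(Path("text-generation-webui/models")))
```

An interrupted download or a saved HTML error page will usually fail this check, which is a common reason a model refuses to load.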

Why Choose Text Generation WebUI?

- ✅ Pros: Unmatched features: character cards, chat histories, countless extensions, advanced generation parameters, support for LoRA adapters.

- ❌ Cons: Setup is more technical. The interface can be overwhelming for beginners.

Choosing Your First AI Model (2026 Recommendations)

The “best” model depends on your hardware and needs. As of early 2026, here are the top contenders:

| Model (Size) | Best For | Speed | Quality | Recommended VRAM |
|---|---|---|---|---|
| Llama 3.2 1B / 3B (Instruct) | Low-end hardware, instant responses, simple tasks. | ⚡⚡⚡⚡⚡ Very Fast | ⚡ Decent | 4 GB+ |
| Qwen 2.5 7B (Instruct) | Best all-around 7B model. Great coding, strong reasoning. | ⚡⚡⚡⚡ Fast | ⚡⚡⚡⚡ Very Good | 8 GB+ |
| Mistral 7B v0.3 (Instruct) | Balanced mix of speed, intelligence, and efficiency. | ⚡⚡⚡⚡ Fast | ⚡⚡⚡ Good | 8 GB+ |
| Llama 3.1 8B (Instruct) | Strong general knowledge and instruction following. | ⚡⚡⚡ Fast | ⚡⚡⚡⚡ Very Good | 8 GB+ |
| Command R 35B (4-bit quantized) | High intelligence for complex tasks. Best if you have RAM/VRAM. | ⚡⚡ Slow | ⚡⚡⚡⚡⚡ Excellent | 16 GB+ |

Start with a 7B model (like Qwen 2.5 7B or Mistral 7B). They offer an excellent balance of capability and speed on modern hardware. Use the 4-bit quantized versions (look for -Q4_K_M in the filename) for the best performance/memory trade-off.
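When a model page offers a dozen files, the quantization tag in the filename tells you which one to grab. The helper below is an illustrative sketch that extracts tags such as `Q4_K_M` from GGUF filenames and picks the preferred one. The filenames in the usage comment are hypothetical, and the pattern only covers the common `Q…`/`IQ…` naming style.

```python
import re
from typing import Optional

# Matches a quantization tag such as Q4_K_M, Q8_0, or IQ4_XS right before ".gguf".
_QUANT_PATTERN = re.compile(r"[.-]((?:I?Q\d+)(?:_[A-Z0-9]+)*)\.gguf$", re.IGNORECASE)


def quantization_tag(filename: str) -> Optional[str]:
    """Return the quantization tag in upper case, or None if none is found."""
    match = _QUANT_PATTERN.search(filename)
    return match.group(1).upper() if match else None


def pick_preferred(filenames: list[str], preferred: str = "Q4_K_M") -> Optional[str]:
    """Return the first filename whose tag matches the preferred quantization."""
    for name in filenames:
        if quantization_tag(name) == preferred:
            return name
    return None


# Example with hypothetical filenames:
# pick_preferred(["model-7b-instruct.Q8_0.gguf", "model-7b-instruct.Q4_K_M.gguf"])
```

If nothing matches, `pick_preferred` returns `None`, so you can fall back to another 4-bit variant by hand.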

First Steps & Prompting Your Local AI

Local models work slightly differently than ChatGPT. To get the best results, structure your prompts clearly.

Instead of: "write a poem"

Try: "You are a creative poet. Write a short, four-stanza poem about a robot learning to feel joy."

Key Prompting Tips:

- Give a Role: “You are an expert Python programmer…”

- Be Specific: Clearly state the format, length, and style you want.

- Use System Prompts (if available): Many interfaces have a “system prompt” box. This is where you set the AI’s permanent behavior for the conversation.
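The tips above can be turned into a small reusable template. This sketch assembles a role, a task, and optional format and length hints into one prompt, and separately builds the system-plus-user message list that many chat interfaces and local APIs accept. The function names and the exact wording of the template are assumptions for illustration.

```python
def build_prompt(role: str, task: str, output_format: str = "", length: str = "") -> str:
    """Combine a role, a task, and optional constraints into one clear prompt."""
    parts = [f"You are {role}.", task.strip()]
    if output_format:
        parts.append(f"Format: {output_format}.")
    if length:
        parts.append(f"Length: {length}.")
    return " ".join(parts)


def build_messages(system_prompt: str, user_prompt: str) -> list[dict]:
    """Build a chat message list with a system prompt followed by the user turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


print(build_prompt(
    "a creative poet",
    "Write a poem about a robot learning to feel joy.",
    output_format="four stanzas",
    length="short",
))
```

Keeping the role in the system message and the task in the user message means you can reuse the same persona across a whole conversation.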

Troubleshooting Common Issues

- “Out of Memory” / CUDA Errors: Your model is too large for your GPU.

- Solution: Download a smaller model (switch from 13B to 7B) or a more heavily quantized version (e.g., Q4 instead of Q8). In your loading software, also try enabling “cpu-offload” or “auto-devices” settings.

- Slow Generation: This is normal on lower-end hardware.

- Solution: Ensure you’re using a GGUF model with GPU offloading enabled. In Text Generation WebUI, increase the `n-gpu-layers` setting to offload more work from the CPU to the GPU.

- Model Gives Nonsense Answers: You might be using a “base” model, not an “instruct” model.

- Solution: Always download models with suffixes like `-Instruct` or `-Chat`. These are fine-tuned to follow instructions. (Tags such as `Q4_K_M` or `IQ4_XS` describe the quantization, not instruction tuning.)
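For the memory and speed issues above, it helps to estimate how many layers you can offload before you start guessing at settings. The sketch below assumes every layer takes an equal share of the model file and keeps some VRAM in reserve for context. Both are simplifications, and the 1.5 GB reserve is an illustrative default rather than a measured figure.

```python
import math


def estimate_gpu_layers(
    model_file_gb: float,
    total_layers: int,
    free_vram_gb: float,
    reserve_gb: float = 1.5,  # assumed headroom for context and buffers
) -> int:
    """Estimate how many model layers fit in the available VRAM."""
    if model_file_gb <= 0 or total_layers <= 0:
        raise ValueError("model_file_gb and total_layers must be positive")
    per_layer_gb = model_file_gb / total_layers
    usable_gb = max(free_vram_gb - reserve_gb, 0.0)
    return min(total_layers, math.floor(usable_gb / per_layer_gb))


# Example: an 8 GB model file with 32 layers on a GPU with 5.5 GB free.
print(estimate_gpu_layers(8.0, 32, 5.5))
```

Start from the estimate, then lower the value if you still hit out-of-memory errors or raise it if VRAM is left unused.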

Conclusion & Your Local AI Journey

You now have the keys to a powerful, private, and free AI assistant. Whether you chose the simplicity of Ollama, the beauty of LM Studio, or the power of Text Generation WebUI, you’ve broken free from API limits and privacy concerns.

Your next steps:

- Experiment: Try different models from the same family to see which “voice” you prefer.

- Integrate: Look into tools that can connect your local LLM to other applications.

- Go Full Local: Pair this local text AI with your locally-run Stable Diffusion (from our previous guide) to create a complete, private AI workstation on your PC.
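As a starting point for the integration step, many local tools expose an OpenAI-style chat endpoint, which lets other applications talk to your model. The sketch below assumes such an endpoint is available at a base URL like `http://localhost:11434/v1` (Ollama’s default) and that the response follows the usual `choices[0].message.content` layout. The helper names are hypothetical.

```python
import json
import urllib.request


def build_chat_request(model: str, messages: list[dict], temperature: float = 0.7) -> dict:
    """Build the JSON body for an OpenAI-style chat completion request."""
    return {"model": model, "messages": messages, "temperature": temperature}


def extract_reply(response_body: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response."""
    return response_body["choices"][0]["message"]["content"]


def chat(base_url: str, model: str, messages: list[dict], timeout: float = 120.0) -> str:
    """Send a chat request to a local OpenAI-compatible server."""
    payload = json.dumps(build_chat_request(model, messages)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.loads(response.read().decode("utf-8")))


# Example (requires a local server to be running):
# chat("http://localhost:11434/v1", "llama3.2", [{"role": "user", "content": "Hello"}])
```

Because the request format is shared, switching between local tools is mostly a matter of changing `base_url` and the model name.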

The era of personal, sovereign AI is here. Welcome to it.

